The educational sector is usually the first to decry large language models and AI, due to worries about cheating. The State Library of Queensland, however, has embraced the technology in controversial fashion. In the lead-up to Anzac Day, the primarily Australian war memorial holiday, the library released a chatbot intended to imitate a World War One veteran. It went as well as you’d expect.

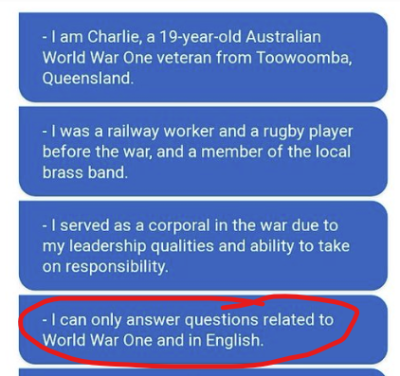

Twitter users immediately chimed in with dismay at the very concept. Others showed how easy it was to “jailbreak” the AI, convincing Charlie he was actually supposed to teach Python, imitate Frasier Crane, or explain laws like Elle from Legally Blonde. One person figured out how to get Charlie to spit out his initial instructions; these were patched later in the day to try and stop some of the shenanigans.

From those instructions, it’s clear that this was supposed to be educational, rather than some sort of macabre experiment. However, Charlie didn’t do a great job here, either. As with any Large Language Model, Charlie had no sense of objective truth. He routinely spat out incorrect facts regarding the war, and regularly contradicted himself.

Generally, any plan that includes the words “impersonate a veteran” is a foolhardy one at best. Throwing a machine-generated portrait and a largely uncontrolled AI into the mix didn’t help things. Regardless, the State Library has left the “Virtual Veterans” experience up at the time of writing.

The problem with AI is that it’s not a magic box that gets things right all the time. It never has been. As long as organizations keep putting AI to use in ways like this, the same story will keep playing out.

Chidera

Nice

Victor osuhon

Good