Robotics kits like the Screwless/Screwed Modular Assemblable Robotic System (SMARS) are great tools for learning how electronics, mechanics, and software combine to perform useful tasks in the physical world. In his latest project, Edge Impulse’s senior embedded software engineer Dmitry Maslov shows how he gave this platform both speech recognition and Wi-Fi control capabilities using an Arduino Nano RP2040 Connect.

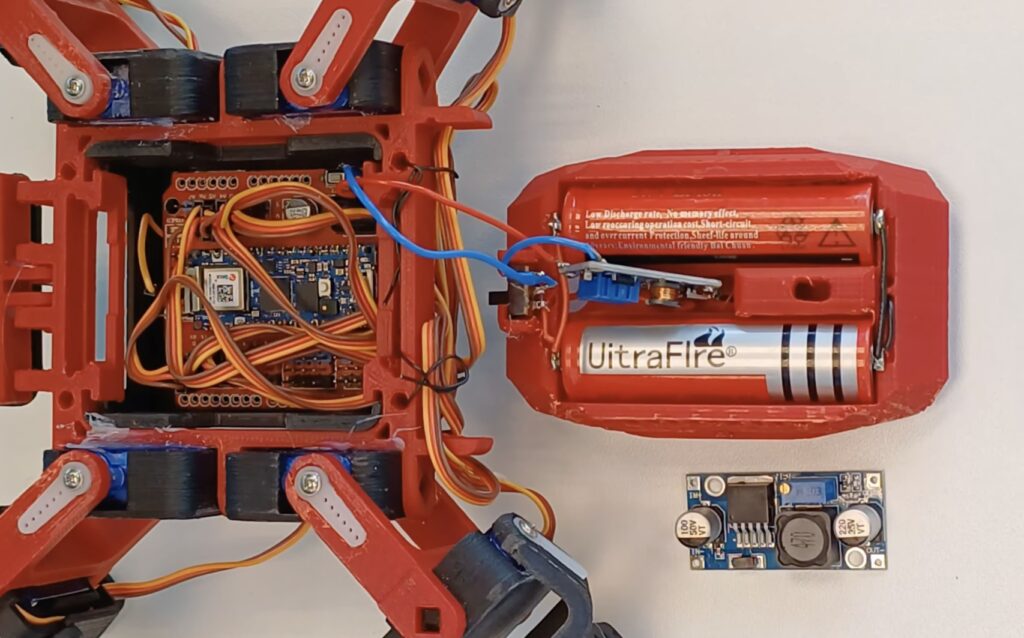

Constructed from an array of 3D-printed parts and eight servo motors, the SMARS Quad Mod robot is a small, blocky quadruped that uses two LiPo battery cells, a step-down converter, and an IO expansion board. It moves based on simple directional commands such as “forward” and “left,” among others. Normally, these would come from an IR remote or a preprogrammed sequence, but by leveraging AI at the edge, the robot can respond in real time to spoken commands. To achieve this, Maslov imported a dataset containing many samples of people saying directions, along with background noise, and then trained a keyword spotting model on it.

Once exported as a C++ library, the model was embedded into the robot’s sketch. Thanks to the RP2040’s dual-core architecture, the first core continuously reads new data from the microphone, runs inference, and sends each result to the second core as soon as it is available. When the second core receives a value, it maps the direction to a sequence of leg movements.
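
To make the hand-off between the two cores more concrete, here is a minimal sketch in portable C++. It is not Maslov’s code. The names `Command`, `commandFromLabel`, `CommandMailbox`, `gaitFor`, and `applyStep` are hypothetical, the “backward” and “right” labels and the 0.8 confidence threshold are assumptions, the split of eight servos into a hip and a knee joint per leg is assumed, and the servo offsets are placeholders rather than a tuned gait. On the actual board, the mailbox would be replaced by whatever inter-core mechanism the chosen Arduino core provides.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical command set. "forward" and "left" are named in the article;
// the remaining labels are assumptions for illustration.
enum class Command : std::uint8_t { None = 0, Forward, Backward, Left, Right };

// Inference side: turn a classifier label into a command, ignoring
// low-confidence or unknown labels (for example background noise).
Command commandFromLabel(const std::string& label, float confidence,
                         float threshold = 0.8f) {
    if (confidence < threshold) return Command::None;
    if (label == "forward") return Command::Forward;
    if (label == "backward") return Command::Backward;
    if (label == "left") return Command::Left;
    if (label == "right") return Command::Right;
    return Command::None;
}

// Single-slot mailbox standing in for the inter-core channel.
// The inference core posts, the motion core takes; a newer command
// overwrites an unread older one.
class CommandMailbox {
public:
    void post(Command c) {
        slot_.store(static_cast<std::uint8_t>(c), std::memory_order_release);
    }
    // Returns the pending command and clears the slot (None if empty).
    Command take() {
        return static_cast<Command>(
            slot_.exchange(0, std::memory_order_acq_rel));
    }
private:
    std::atomic<std::uint8_t> slot_{0};
};

// One movement step: which leg, and how far to offset its two joints.
struct LegStep {
    std::uint8_t leg;      // 0..3
    std::int8_t hipDelta;  // degrees, placeholder value
    std::int8_t kneeDelta; // degrees, placeholder value
};

// Motion side: map a command to a sequence of leg movements.
// The numbers are illustrative only, not a working gait.
std::vector<LegStep> gaitFor(Command c) {
    switch (c) {
        case Command::Forward:
            return {{0, 20, -10}, {3, 20, -10}, {1, 20, -10}, {2, 20, -10}};
        case Command::Backward:
            return {{0, -20, -10}, {3, -20, -10}, {1, -20, -10}, {2, -20, -10}};
        case Command::Left:
            return {{0, -15, -10}, {1, 15, -10}, {2, -15, -10}, {3, 15, -10}};
        case Command::Right:
            return {{0, 15, -10}, {1, -15, -10}, {2, 15, -10}, {3, -15, -10}};
        case Command::None:
        default:
            return {};
    }
}

constexpr std::size_t kLegs = 4;
// Assumed layout: servo 2*leg is the hip, servo 2*leg + 1 is the knee.
using ServoAngles = std::array<int, 2 * kLegs>;

// Apply one step to the target angles; a real sketch would then write
// these angles out to the servo driver.
void applyStep(ServoAngles& angles, const LegStep& s) {
    assert(s.leg < kLegs);
    angles[2 * s.leg] += s.hipDelta;
    angles[2 * s.leg + 1] += s.kneeDelta;
}
```

In this arrangement, the inference loop would call `post(commandFromLabel(label, score))` after each classification, while the motion loop would repeatedly call `take()` and run `applyStep` over `gaitFor(...)` whenever the result is not `Command::None`. Keeping only the most recent command in the slot is a design choice that favors responsiveness over queuing every utterance.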

For more information about this project, you can check out Maslov’s tutorial on Hackster.io and see its dataset/model here in the Edge Impulse Studio.