A team at Google has spent a lot of time recently playing table tennis, purportedly only for science. Their goal was to see whether they could construct a robot that would not only play table tennis, but even keep up with practiced human players. In the paper available on arXiv, they detail what it took to make it happen. The team also set up a site with a simplified explanation and some videos of the robot in action.

In the end, it took twenty motion-capture cameras, a pair of 125 FPS cameras, a 6 DOF robot on two linear rails, a special table tennis paddle, and a very large annotated dataset on which to train multiple convolutional neural networks (CNNs) that analyze the incoming visual data. This visual data was then combined with details such as the paddle’s position to churn out a value for use in the look-up table that forms the core of the high-level controller (HLC). This look-up table then decides which low-level controller (LLC) is picked to perform a certain action. To prevent the CNNs of the LLCs from ‘forgetting’ the training data, a total of 17 different CNNs were used, one per LLC.
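To make the split between the HLC and the LLCs a bit more concrete, here is a minimal Python sketch of a table-driven dispatcher. It is purely illustrative and not the team's code: the `BallEstimate` fields, the skill names, the four-entry `SKILL_TABLE`, and the `FALLBACK_LLC` are all assumptions invented for this example, and each LLC is reduced to a placeholder function rather than a trained CNN.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class BallEstimate:
    """Hypothetical summary of the vision output for one incoming ball."""
    side: str  # "forehand" or "backhand", relative to the robot
    spin: str  # "topspin" or "underspin"


# A low-level controller (LLC) is modeled here as a function from a ball
# estimate to a named action. This is a stand-in for a trained network.
LLC = Callable[[BallEstimate], str]


def make_llc(skill: str) -> LLC:
    """Build a placeholder LLC that only reports which skill it would run."""
    def act(ball: BallEstimate) -> str:
        return f"{skill} vs {ball.spin}"
    return act


# Hypothetical look-up table: discretized ball features -> specialized LLC.
# The article mentions 17 LLCs; four are enough to show the idea.
SKILL_TABLE: Dict[Tuple[str, str], LLC] = {
    ("forehand", "topspin"): make_llc("forehand drive"),
    ("forehand", "underspin"): make_llc("forehand push"),
    ("backhand", "topspin"): make_llc("backhand block"),
    ("backhand", "underspin"): make_llc("backhand push"),
}

# Assumed fallback for inputs the table does not cover.
FALLBACK_LLC: LLC = make_llc("safe return")


def high_level_controller(ball: BallEstimate) -> str:
    """Pick one LLC from the table and let it produce the action."""
    llc = SKILL_TABLE.get((ball.side, ball.spin), FALLBACK_LLC)
    return llc(ball)
```

Calling `high_level_controller(BallEstimate("forehand", "topspin"))` routes the ball to the forehand-drive placeholder. Keeping each skill in its own controller mirrors the one-network-per-LLC idea described above: adding or retraining one skill does not touch the others.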

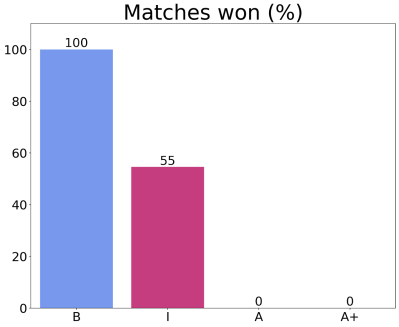

The robot was tested against a range of players from a local table tennis club, which made clear that while it could easily defeat beginners, intermediate players posed a serious threat. Advanced players completely demolished the table tennis robot. Clearly we do not have to fear our robotic table-tennis-playing overlords just yet, but the robot did receive praise for being an interesting practice partner.