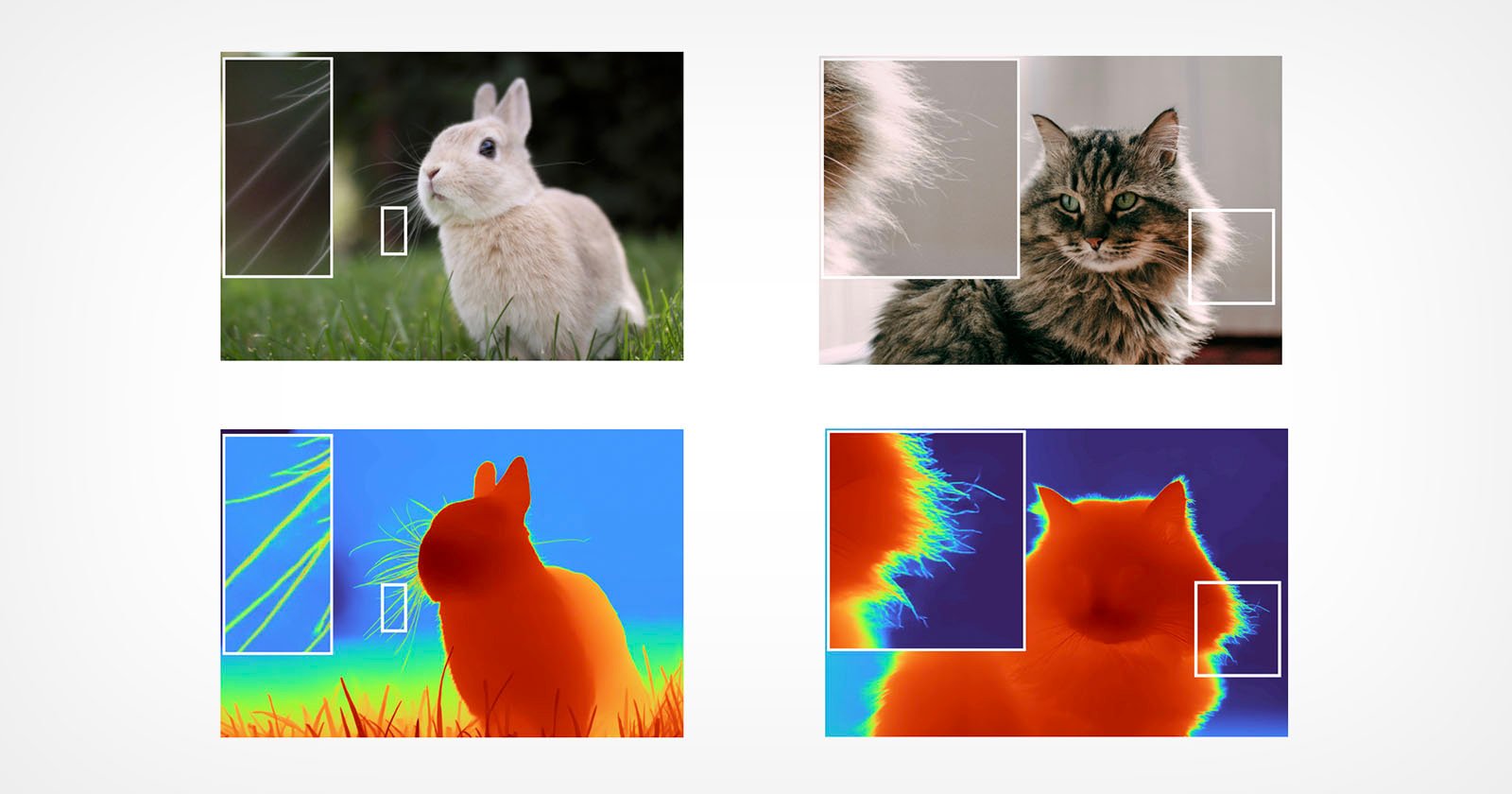

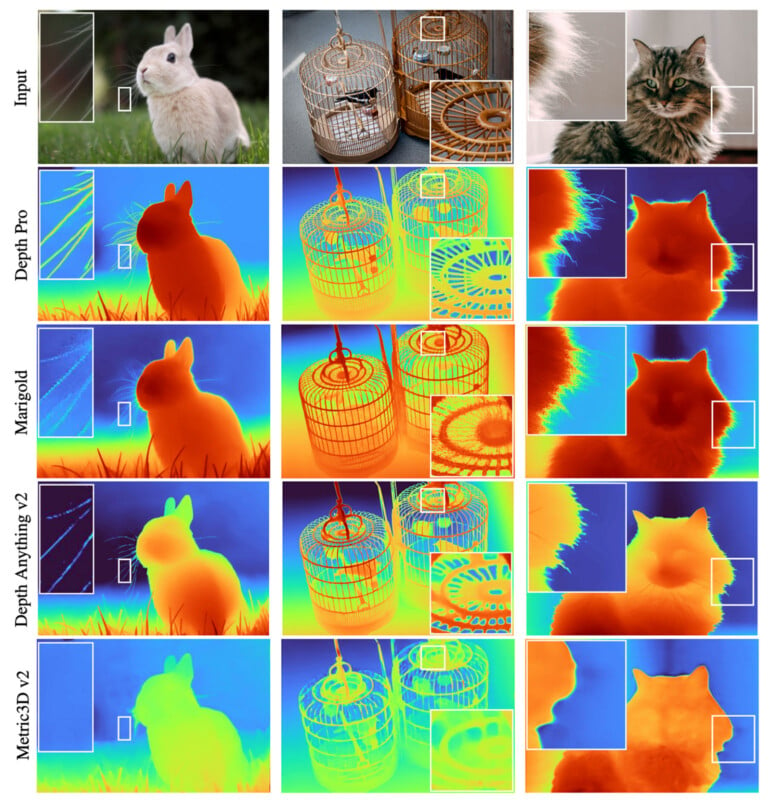

Apple’s Machine Learning Research team has created a new AI model that promises significant improvements in how computer vision models analyze three-dimensional space within a two-dimensional image.

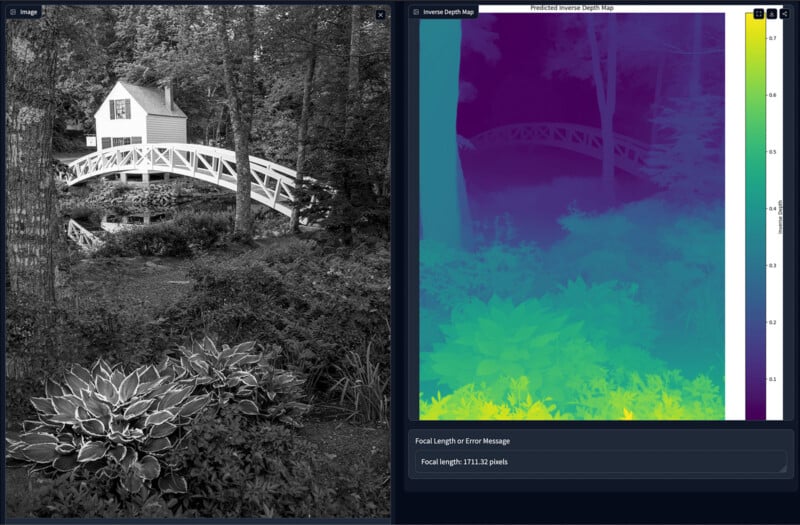

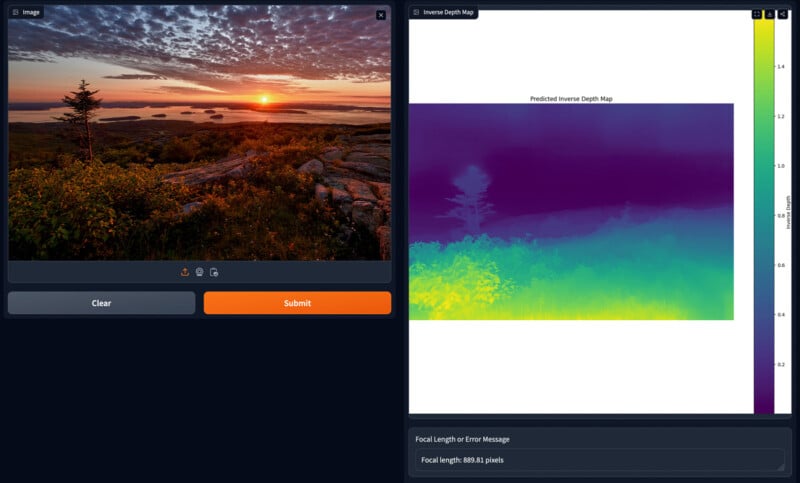

The new AI model, as reported by VentureBeat, is called Depth Pro and is detailed in a new paper, “Depth Pro: Sharp Monocular Metric Depth in Less Than a Second.” Depth Pro promises to create sophisticated 3D depth maps from individual 2D images quickly. The paper’s abstract explains that the model can make a 2.25-megapixel depth map from an image in 0.3 seconds using a consumer-grade GPU.

Although devices like Apple’s latest iPhones can capture depth maps with on-device sensors, most still images have no accompanying real-world depth data. Depth maps for these images would be valuable in many applications, including routine image editing. For example, if someone wants to edit only a subject or add an artificial “lens” blur to a scene, a depth map helps software create precise masks. A depth map model can also aid AI image generation, since a solid grasp of scene depth can help a synthesis model produce more realistic results.
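To illustrate how a depth map turns into an editing mask, here is a minimal sketch in plain Python. It assumes a grayscale image and a per-pixel depth map in meters stored as nested lists, and it uses a simple box blur as a stand-in for a real lens-blur kernel. The function names (`subject_mask`, `box_blur`, `fake_lens_blur`) and the depth threshold are hypothetical and are not part of Depth Pro or any Apple API.

```python
from typing import List

Grid = List[List[float]]  # row-major 2D array: depth in meters, or gray levels
Mask = List[List[bool]]


def subject_mask(depth: Grid, max_depth_m: float) -> Mask:
    # Hypothetical helper: foreground = every pixel within max_depth_m of the camera.
    return [[d <= max_depth_m for d in row] for row in depth]


def box_blur(img: Grid, radius: int = 1) -> Grid:
    # Mean filter over a (2*radius+1)^2 window, clipped at the image borders.
    # A simple stand-in for a real lens-blur (bokeh) kernel.
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [
                img[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            out[y][x] = sum(vals) / len(vals)
    return out


def fake_lens_blur(img: Grid, depth: Grid, max_depth_m: float, radius: int = 1) -> Grid:
    # Keep the near subject sharp and replace everything behind it with blurred pixels.
    mask = subject_mask(depth, max_depth_m)
    blurred = box_blur(img, radius)
    return [
        [img[y][x] if mask[y][x] else blurred[y][x] for x in range(len(img[0]))]
        for y in range(len(img))
    ]


if __name__ == "__main__":
    depth = [[1.0, 1.0, 5.0], [1.0, 1.0, 5.0], [5.0, 5.0, 5.0]]  # subject in the top-left
    img = [[0.0, 0.0, 9.0], [0.0, 0.0, 9.0], [9.0, 9.0, 9.0]]
    print(fake_lens_blur(img, depth, max_depth_m=2.0))
```

The quality of such a mask depends directly on how sharp the depth map is at object edges, which is why the occlusion-boundary detail the researchers describe matters for editing tasks.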

As the Apple researchers — Aleksei Bochkovskii, Amaël Delaunoy, Hugo Germain, Marcel Santos, Yichao Zhou, Stephan R. Richter, and Vladlen Koltun — explain, an effective zero-shot metric monocular depth estimation model must swiftly produce accurate, high-resolution results to be helpful. A sloppy depth map is of little value.

“Depth Pro produces high-resolution metric depth maps with high-frequency detail at sub-second runtimes. Our model achieves state-of-the-art zero-shot metric depth estimation accuracy without requiring metadata such as camera intrinsics and traces out occlusion boundaries in unprecedented detail, facilitating applications such as novel view synthesis from single images ‘in the wild,’” Apple researchers explain. However, the team acknowledges some limitations, including trouble dealing with translucent surfaces and volumetric scattering.

As VentureBeat explains, beyond photo editing and novel view synthesis, a depth map model could also prove useful for augmented reality (AR) applications, in which virtual objects must be accurately placed within physical space. Depth Pro handles both relative and absolute (metric) depth, which is vital for many of these use cases.
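Why absolute depth matters for AR placement can be sketched with the standard pinhole camera model: a pixel with metric depth can be lifted to a 3D point in meters, so real-world distances and object sizes come out correctly. This sketch assumes the camera’s focal length and principal point are already known or estimated; the `Intrinsics` values, `backproject`, and `real_distance` are hypothetical illustrations, not Depth Pro’s interface.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    # Hypothetical pinhole-camera parameters, all in pixels.
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def backproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Point3:
    # Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, with Z = metric depth.
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def real_distance(p: Point3, q: Point3) -> float:
    # Euclidean distance in meters between two back-projected points.
    return math.dist(p, q)


if __name__ == "__main__":
    k = Intrinsics(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)  # assumed 1920x1080 camera
    left = backproject(460.0, 540.0, 2.0, k)
    right = backproject(1460.0, 540.0, 2.0, k)
    print(left, right, real_distance(left, right))
```

With only relative depth, every `depth_m` would be off by the same unknown scale factor, so the computed distance (and the apparent size of a virtual object anchored there) would be off by that factor too. Metric depth removes that ambiguity.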

People can test Depth Pro for themselves on Hugging Face and learn much more about the inner workings of the depth model by reading Apple’s new research paper.

Image credits: Apple Machine Learning Research