Programming languages are generally defined as a more human-friendly way to program computers than using raw machine code. Within the realm of these languages there is a wide range in how close the programmer is allowed to get to the bare metal, which can ultimately affect the performance and efficiency of the application. One metric that has become more important over the years is energy efficiency, as datacenters keep growing along with their power demand. If picking one programming language over another saves even 1% of a datacenter’s electricity consumption, this could prove to be highly beneficial, assuming that the saving holds up when weighed against all the other factors one would consider.

There have been some attempts over the years to put a number on the energy efficiency of specific programming languages. A 2021 paper by Rui Pereira et al. (preprint PDF), published in Science of Computer Programming, covers running a couple of small benchmarks, measuring system power consumption and drawing conclusions based on this. When Hackaday covered the earlier 2017 paper at the time, it was with the expected claim that C is the most efficient programming language, while of course scripting languages like JavaScript, Python and Lua trailed far behind.

With C being effectively high-level assembly code this is probably no surprise, but languages such as C++ and Ada should see no severe performance penalty over C due to their design, which is the part where this particular study begins to fall apart. So what is the truth and can we even capture ‘efficiency’ in a simple ranking?

Defining Energy Efficiency

At its core, ‘energy efficiency’ is pretty simple to define: it’s the total amount of energy required to accomplish a specific task. In the case of a software application, this means the whole-system power usage, including memory, disk and processor(s). Measuring the whole-system power usage is also highly relevant, as not every programming and scripting language taxes these subsystems in the same way. In the case of Java, for example, its CPU usage isn’t that dissimilar from the same code written in C, but it will use significantly more memory in the process of doing so.
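To make the definition concrete: energy is power integrated over time, so a program that draws less power but runs for longer can still come out behind. The following is a minimal sketch, assuming evenly spaced whole-system power readings in watts from some external meter. The function name `energy_joules` and the sampling setup are made up for illustration and do not come from any of the papers discussed here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: integrate evenly spaced whole-system power samples
// (in watts) over time using the trapezoidal rule. Returns energy in joules.
double energy_joules(const std::vector<double>& power_watts,
                     double interval_seconds) {
    assert(interval_seconds > 0.0);
    double joules = 0.0;
    // Each pair of neighbouring samples spans one interval.
    for (std::size_t i = 1; i < power_watts.size(); ++i) {
        joules += 0.5 * (power_watts[i - 1] + power_watts[i]) * interval_seconds;
    }
    return joules;
}
```

With one-second samples, a task that draws a steady 100 W for 10 seconds works out to 1,000 J, while one that draws only 60 W but needs 20 seconds works out to 1,200 J.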

Two major confounding factors when it comes to individual languages are:

- Idiomatic styles versus a focus on raw efficiency.

- Native language features versus standard library features.

The idiomatic style factor is effectively some kind of agreed-upon language usage, which potentially eschews more efficient ways of accomplishing the exact same thing. Consider here for example C++, and the use of smart pointers versus raw pointers, with the former being part of the C++ standard library (colloquially the STL) instead of a native language feature. Some would argue that using a ‘smart pointer’ like a unique_ptr or shared_ptr is the idiomatic way to use C++, rather than the native language support for raw pointers, despite the overhead that these can add.
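As a small illustration of the two styles, here is the same trivial task written once with a raw pointer and once with a std::unique_ptr. This is only a sketch: the `Sample` type and both function names are hypothetical, and how much overhead, if any, the smart pointer adds depends on the pointer type, the compiler and its optimization settings, which is exactly the kind of thing a benchmark has to pin down.

```cpp
#include <memory>

// Hypothetical payload type, used only for this illustration.
struct Sample {
    int value;
};

// Native language feature: raw pointer with manual new/delete.
int read_with_raw_pointer(int v) {
    Sample* sample = new Sample{v};
    int result = sample->value;
    delete sample;  // Forgetting this line leaks the allocation.
    return result;
}

// Standard library feature: std::unique_ptr releases the allocation
// automatically when it goes out of scope.
int read_with_unique_ptr(int v) {
    std::unique_ptr<Sample> sample(new Sample{v});
    return sample->value;
}
```

Both functions return the same value for the same input, so a language comparison that uses one style for C++ and the native equivalent for C or Ada is comparing coding conventions as much as languages.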

A similar example stems from C++ being at its core an extension to C, which means that printf() and similar functions remain available via the <cstdio> standard library header. The idiomatic way to use C++ is to use the stream-based functions found in the <iostream> header, so that instead of employing low-level functions like putc() and straightforward formatted output functions like printf() to write this:

printf("Printing %d numbers and this string: %s.\n", number, string);

The idiomatic stream equivalent is:

std::cout << "Printing " << number << " numbers and this string: " << string << "." << std::endl;

Not only is the idiomatic version longer, harder to read, more convoluted and easier to get formatting wrong with, it is internally also significantly more complicated than a simple parse-and-replace and thus causes more overhead. This is why C++20 decided to double down on stream formatting while also fudging in printf-like support with std::format and other functions in the new <format> header. Because things can always get worse.
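To compare the two styles on equal footing, both can be made to format into memory rather than straight to the console. The sketch below assumes a message short enough for a fixed 256-byte buffer, and the function names are invented for this example. It also uses "\n" rather than std::endl in the stream version, since std::endl additionally flushes the stream.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <sstream>
#include <string>

// printf-style: format into a fixed-size stack buffer with snprintf().
std::string format_printf_style(int number, const char* text) {
    assert(text != nullptr);
    char buffer[256];
    int written = std::snprintf(buffer, sizeof(buffer),
                                "Printing %d numbers and this string: %s.\n",
                                number, text);
    // snprintf() returns the length the full output needs; a value at or
    // above the buffer size would mean the message was truncated.
    assert(written >= 0 && static_cast<std::size_t>(written) < sizeof(buffer));
    return std::string(buffer);
}

// Stream-style: build the same message with chained operator<< calls.
std::string format_stream_style(int number, const char* text) {
    assert(text != nullptr);
    std::ostringstream out;
    out << "Printing " << number << " numbers and this string: " << text << ".\n";
    return out.str();
}
```

Both functions return identical strings for the same arguments, which makes them a fair pair to time against each other.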

Know What You’re Measuring

At this point we have defined what energy efficiency means for programming languages, and touched upon a few confounding factors. All of this leads to the golden rule in science: know what you’re measuring. Or in less fanciful phrasing: ‘garbage in, garbage out’, as conclusions drawn from data using flawed assumptions truly are a complete waste of anyone’s time. Whether it was deliberate, due to wishful thinking or a flawed experimental setup, the end result is the same: a meticulously crafted document that can go straight into the shredder.

In this particular comparative analysis, the pertinent question is whether the code used is truly equivalent. Looking across the papers by Pereira et al. (2017, 2021), Gordillo et al. (2024) or even a 2000 paper by Lutz Prechelt reveals stark differences between the results, with seemingly the only constant being that ‘C is pretty good’. A language such as C++ ends up being either very close to C (Gordillo et al., Prechelt) or wildly varying in tests (Pereira et al.). All of this points towards an issue with the code being used, as power usage and time measurements are significantly more straightforward to verify.

In the case of the 2021 paper by Pereira et al., the code examples used come from Rosetta Code, with the code-as-used also provided on GitHub. Taking as an example the Hailstone Sequence, we can see a number of fascinating differences between the C, C++ and Ada versions, particularly as it pertains to the use of console output and standard library versus native language features.

The C version of the Hailstone Sequence has two printf() statements, while the C++ version has no fewer than five instances of std::cout. The Ada version comes in at two put(), two new_line (which should be merged with put_line) and one put_line. This difference in console output is already a red flag, even considering that when benchmarking you should never have console output enabled as this draws in significant parts of the operating system, with resulting high levels of variability due to task scheduling, etc.
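As a rough sketch of what a less noisy measurement could look like, the following keeps all console output out of the timed region and only reports the results afterwards. The names `hailstone_length`, `BenchmarkResult` and `benchmark_hailstone` are illustrative and are not taken from the paper's repository or from Rosetta Code.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>

// Number of terms in the hailstone sequence starting at n,
// counting both n itself and the final 1.
std::uint64_t hailstone_length(std::uint64_t n) {
    assert(n > 0);
    std::uint64_t length = 1;
    while (n != 1) {
        n = (n % 2 == 0) ? n / 2 : 3 * n + 1;
        ++length;
    }
    return length;
}

struct BenchmarkResult {
    std::uint64_t longest_start;   // Starting value with the longest sequence.
    std::uint64_t longest_length;  // Number of terms in that sequence.
    double seconds;                // Wall-clock time spent computing.
};

// Find the longest sequence for all starting values below `limit`.
// Only computation happens between the two clock reads: no printing.
BenchmarkResult benchmark_hailstone(std::uint64_t limit) {
    auto start = std::chrono::steady_clock::now();
    std::uint64_t best_start = 1;
    std::uint64_t best_length = 1;
    for (std::uint64_t n = 1; n < limit; ++n) {
        std::uint64_t length = hailstone_length(n);
        if (length > best_length) {
            best_length = length;
            best_start = n;
        }
    }
    auto stop = std::chrono::steady_clock::now();
    double seconds = std::chrono::duration<double>(stop - start).count();
    return {best_start, best_length, seconds};
}
```

The caller can print the three fields once `benchmark_hailstone()` has returned, so that terminal and scheduler behavior no longer ends up inside the measurement.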

The next red flag is that while the Ada and C versions use the native array type, the C++ version uses std::vector, which is absolutely not equivalent to an array and should not be used if efficiency is at all a concern due to the internal copying and house-keeping performed by the std::vector data structure.
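To show what that difference looks like in code, here is a hedged sketch of the same sequence generation written against a caller-provided native array and against a growing std::vector. Both function names are invented for this example, and this is not the code used in the paper.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Native array version: the caller provides the storage, nothing is
// allocated here. Returns the number of terms written, which is capped
// at `capacity`.
std::size_t hailstone_into_array(unsigned long n, unsigned long* out,
                                 std::size_t capacity) {
    assert(n > 0 && out != nullptr && capacity > 0);
    std::size_t count = 0;
    out[count++] = n;
    while (n != 1 && count < capacity) {
        n = (n % 2 == 0) ? n / 2 : 3 * n + 1;
        out[count++] = n;
    }
    return count;
}

// std::vector version: storage lives on the heap and may be reallocated
// and copied whenever push_back() runs out of capacity.
std::vector<unsigned long> hailstone_into_vector(unsigned long n) {
    assert(n > 0);
    std::vector<unsigned long> sequence;
    sequence.push_back(n);
    while (n != 1) {
        n = (n % 2 == 0) ? n / 2 : 3 * n + 1;
        sequence.push_back(n);
    }
    return sequence;
}
```

The two produce the same terms, but only the second one involves the allocator, so timing one against the other measures the container choice rather than the language.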

If we consider that Rosetta Code is a communal wiki that does not guarantee that the code snippets provided are ‘absolutely equivalent’, that means that the resulting paper by Pereira et al. is barely worth the trip to the shredder and consequently a total waste of a tree.

Not All Bad

None of this should come as a surprise, of course, as it is well-known (or should be) that C++ produces the exact same code as C unless you use specific constructs like RTTI or the horror show that is C++ exceptions. Similarly, Ada code with semantics similar to those of C code should not show significant performance differences. The problem with many of the ‘programming language efficiency’ studies is simply that they take code from a purportedly authoritative source without being fluent in the chosen languages, run it in a controlled environment and then draw conclusions based on the mangled garbage that comes out at the end.

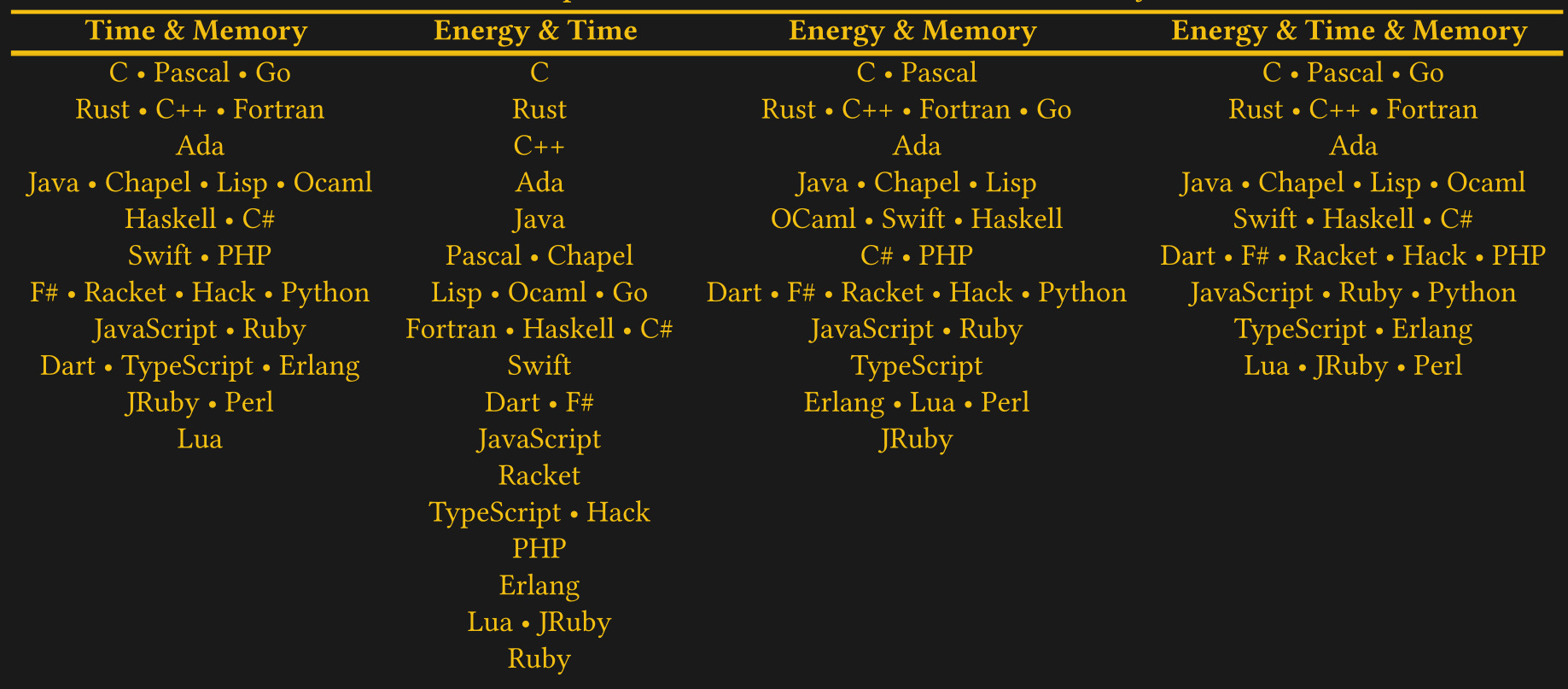

That said, there are some conclusions that can be drawn from the fancy-but-horrifically-flawed tables, such as how comically inefficient scripting languages like Python are. This was also the take-away by Bryan Lunduke in a recent video when he noted that Python is 71 times slower and uses 75 times more energy based on the Pereira et al. paper. Even if it’s not exactly 71 times slower, Python is without question a total snail even among scripting languages, where it trades blows with Perl, PHP and Ruby at the bottom of every ranking.

The take-away here is thus perhaps that rather than believing anything you see on the internet (or read in scientific papers), it pays to keep an open mind and run your own benchmarks. As eating your own dogfood is crucial in engineering, I can point to my own remote procedure call library in C++ (NymphRPC), on which I performed a range of optimizations to reduce overhead. This mostly involved getting rid of std::string and moving to a zero-copy system involving C-isms like memcpy and every bit of raw pointer arithmetic and bit-wise operator goodness that is available.
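To give a flavor of that kind of change, here is a minimal sketch of reading a length-prefixed field from a receive buffer, once by copying into a std::string and once by handing out a view into the original buffer. This is not NymphRPC's actual code: the wire format (a 4-byte length in host byte order followed by the payload), the `FieldView` type and both function names are assumptions made purely for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>

// Hypothetical zero-copy view: points into the caller's buffer, owns nothing.
struct FieldView {
    const std::uint8_t* data;
    std::uint32_t length;
};

// Copying version: allocates a std::string and copies the payload into it.
std::string read_field_copy(const std::uint8_t* buffer, std::size_t size) {
    assert(buffer != nullptr && size >= sizeof(std::uint32_t));
    std::uint32_t length = 0;
    std::memcpy(&length, buffer, sizeof(length));  // Safe unaligned read.
    assert(length <= size - sizeof(length));
    return std::string(reinterpret_cast<const char*>(buffer + sizeof(length)),
                       length);
}

// Zero-copy version: only the 4-byte length is copied, the payload stays
// where it is. The view is valid only for as long as `buffer` is.
FieldView read_field_view(const std::uint8_t* buffer, std::size_t size) {
    assert(buffer != nullptr && size >= sizeof(std::uint32_t));
    std::uint32_t length = 0;
    std::memcpy(&length, buffer, sizeof(length));
    assert(length <= size - sizeof(length));
    return FieldView{buffer + sizeof(length), length};
}
```

The trade-off is the usual one: the view avoids an allocation and a copy per field, but the caller becomes responsible for keeping the underlying buffer alive.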

The result for NymphRPC was a four-fold increase in performance, which is probably a good indication of how much performance you can gain if you stick close to C-style semantics. It also makes it obvious how limited these small code snippets are, as with a real application you also deal with cache access, memory alignment and cache eviction issues, all of which can turn a seemingly efficient approach into a sluggish affair.