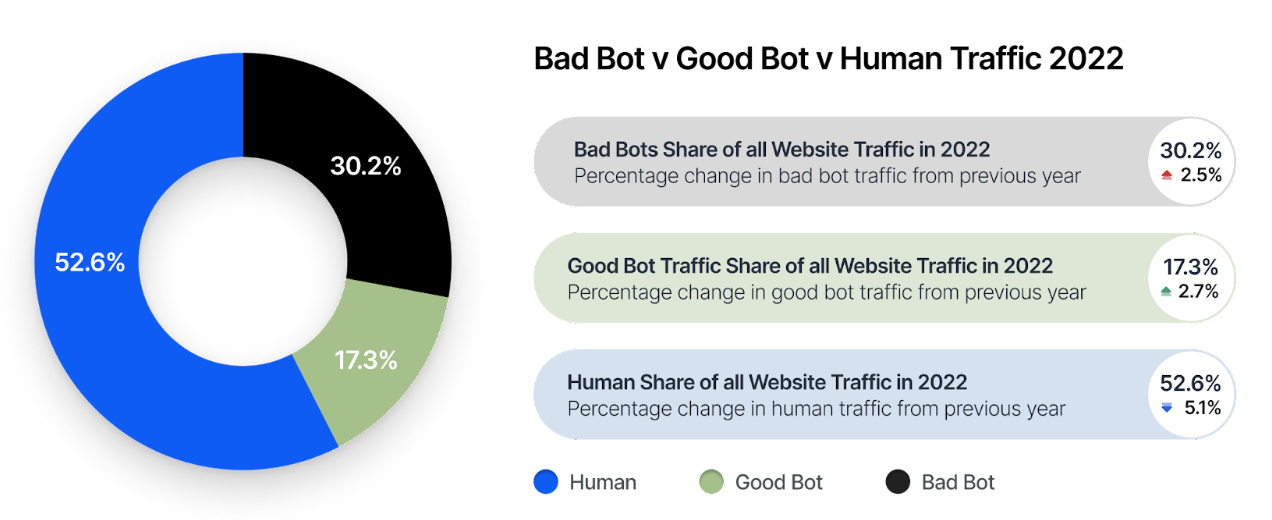

Automation has been a part of the Internet since long before the appearance of the World Wide Web and the first web browsers, but it’s become a significantly larger part of total traffic over the past decade. A recent report by cybersecurity services company Imperva pins the level of automated traffic (‘bots’) at roughly fifty percent of total traffic, with about 32% of all traffic attributed to ‘bad bots’, meaning automated traffic that crawls and scrapes content to e.g. train large language models (LLMs) and generate automated content, as well as performing automated attacks on the countless APIs accessible on the Internet.

According to Imperva, this is the fifth year of rising ‘bad bot’ traffic, with the 2023 report again noting an increase of a few percent. Meanwhile, ‘good bot’ traffic also keeps increasing year over year. While these bots are not directly nefarious, many of them can throw off analytics and of course generate increased costs, especially for smaller websites. Most worrisome are the automated attacks by the bad bots, which range from account takeover attempts to exploiting vulnerable web-based APIs. It’s not just Imperva making these claims: the idea that automated traffic will soon destroy the WWW has floated around since the late 2010s as the ‘Dead Internet theory’.

Although the idea that the Internet will ‘die’ is probably overblown, the increase in automated traffic makes it increasingly hard to distinguish human-generated content and human commentators from fake content and accounts. This is worrisome given how many of today’s opinions are formed and reinforced on e.g. ‘social media’ websites, while more and more comments, images and even videos are manipulated or machine-generated.
