A flame in a controlled setting, such as a lit candle, is no cause for alarm, but embedded AI models that rely on vision data alone often cannot tell the difference and produce false positives. Solomon Githu’s project aims to lower the rate of incorrect detections with a multi-input sensor fusion technique, in which a model uses both image and temperature data to decide whether to raise an alert about a potentially dangerous blaze.

Both kinds of data come from the Nano 33 BLE Sense in the Arduino TinyML Kit. Using the kit, Githu captured a wide variety of images with the OV7675 camera module and temperature readings with the Nano 33 BLE Sense’s onboard HTS221 sensor. After exporting a large dataset of fire and no-fire samples across a range of ambient temperatures, he trained the model in Google Colab and then imported it into Edge Impulse Studio. There, the model’s memory footprint was further reduced to fit onto the Nano 33 BLE Sense.

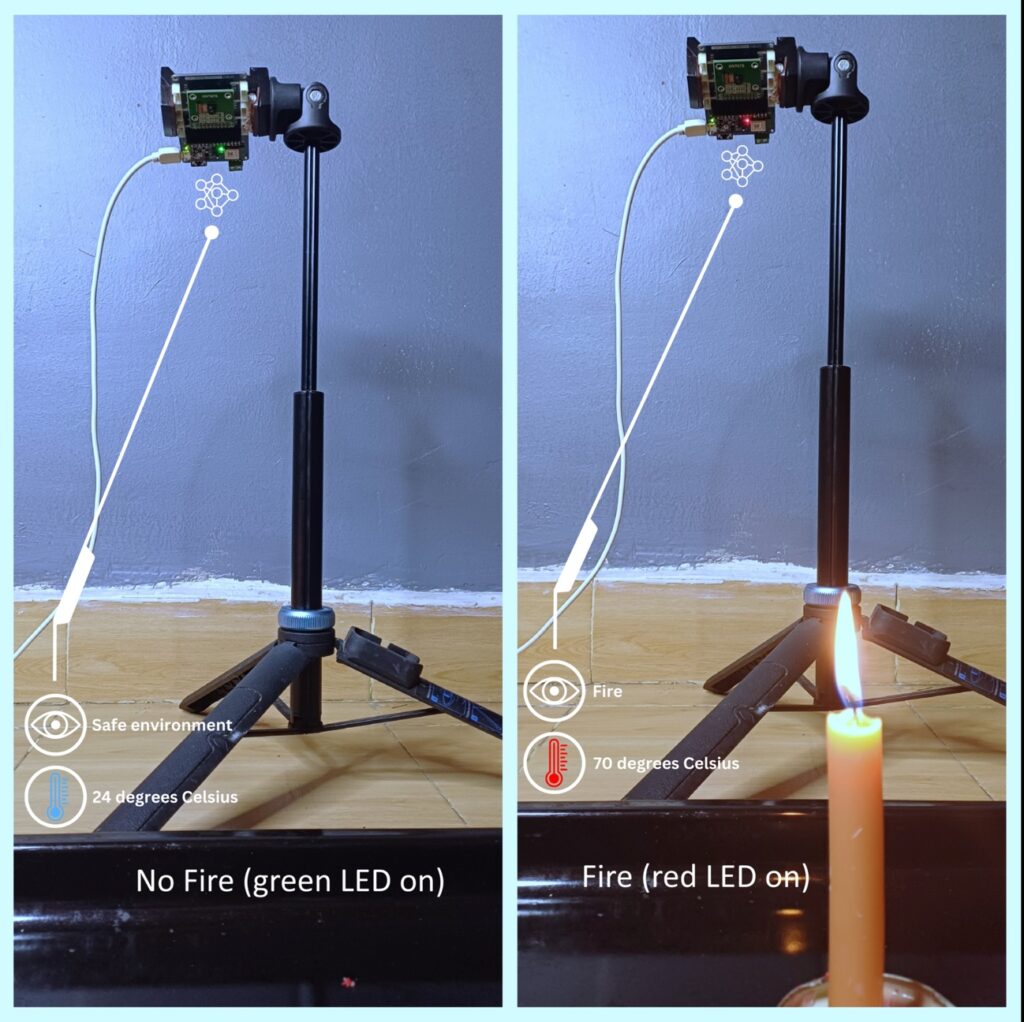

The inferencing sketch polls the camera for a new frame and resizes it. The frame data is then merged with a fresh sample from the temperature sensor and passed through the model, which outputs either “fire” or “safe_environment”. As detailed in Githu’s project post, the system correctly classified several test scenarios, reporting a positive detection when a flame was combined with elevated temperatures.
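
To make the fusion step concrete, here is a minimal Python sketch of the flow described above: resize a frame, append a temperature reading to the pixel data, and classify the combined feature vector. It is not Githu’s code. The function names, the grayscale frame format, the 0 to 100 °C scaling range, and above all the threshold-based `classify` stand-in are assumptions made for illustration; the real project runs a trained neural network on the Nano 33 BLE Sense.

```python
from typing import List, Sequence

LABELS = ("fire", "safe_environment")  # class names taken from the article


def resize_nearest(frame: Sequence[Sequence[int]], out_w: int, out_h: int) -> List[List[int]]:
    """Downscale a 2D grayscale frame with nearest-neighbour sampling."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def fuse_features(frame: Sequence[Sequence[int]], temperature_c: float,
                  temp_min: float = 0.0, temp_max: float = 100.0) -> List[float]:
    """Flatten the frame and append the temperature as one extra feature.

    Pixels are scaled to [0, 1]. The temperature is scaled into the same
    range using an assumed span of temp_min..temp_max and then clamped.
    """
    pixels = [p / 255.0 for row in frame for p in row]
    t = (temperature_c - temp_min) / (temp_max - temp_min)
    return pixels + [min(1.0, max(0.0, t))]


def classify(features: Sequence[float]) -> str:
    """Hypothetical stand-in for the trained model.

    Reports "fire" only when the image is bright AND the temperature is
    elevated, mimicking how a second input can suppress false positives.
    Both thresholds are arbitrary illustrative values.
    """
    pixels, temperature = features[:-1], features[-1]
    brightness = sum(pixels) / len(pixels)
    is_fire = brightness > 0.6 and temperature > 0.5
    return LABELS[0] if is_fire else LABELS[1]


if __name__ == "__main__":
    bright_frame = [[255] * 8 for _ in range(8)]  # synthetic "flame-like" frame
    small = resize_nearest(bright_frame, 4, 4)
    print(classify(fuse_features(small, 22.0)))   # bright frame at room temperature
    print(classify(fuse_features(small, 70.0)))   # bright frame at high temperature
```

In this toy version the same bright frame yields different labels depending on the temperature reading, which is the behaviour the multi-input approach is meant to achieve. A trained model learns that relationship from the dataset rather than from fixed thresholds.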