Media formats have come a long way since the early days of computing. Once upon a time, the very idea of even playing live audio was considered a lofty goal, with home computers instead making do with simple synthesizer chips instead. Eventually, though, real audio became possible, and in turn, video as well.

But what of the formats in which we store this media? Today, there are so many—from MP3s to MP4s, old-school AVIs to modern *.h264s. Senior software engineer Ben Combee came down to the 2023 Hackaday Supercon to give us all a run down of modern audio and video formats, and how they’re best employed these days.

Vaguely Ironic

Before we dive into the meat of the talk, it’s important we acknowledge the elephant in the room. Yes, the audio on Ben’s talk was completely absent until seven minutes and ten seconds in. The fact that this happened on a talk about audio/visual matters has not escaped us. In any case, Ben’s talk is still very much worth watching—most of it has perfectly fine audio and you can quite easily follow what he’s saying from his slides. Ben, you have our apologies in this regard.

Choose Carefully

Ben’s talk starts with fundamentals. He notes you need to understand your situation in exquisite detail to ensure you’re picking the correct format for the job. You need to think about what platform you’re using, how much processing you can do on the CPU, and how much RAM you have to spare for playback. There’s also the question of storage, too. Questions of latency are also important if your application is particularly time-sensitive, and you should also consider whether you’ll need to encode streams in addition to simply decoding them. Or, in simpler terms, are you just playing media, or are you recording it too? Finally, he points out that you should consider licensing or patent costs. This isn’t such a concern on small hobby projects, but it’s a big deal if you’re doing something commercially.

When it comes to picking an audio format, you’ll need to specify your desired bit rate, sample size, and number of channels. Metadata might be important to your application, too. He provides a go-to list of popular choices, from the common uncompressed PCM to the ubiquitous MP3. Beyond that, there are more modern codecs like AAC and Vorbis, as well as those for specialist applications like aLaw and uLaw.

Ben notes that MP3 is particularly useful these days, as its patents ran out in 2018. However, it does require a lot of software to decode, and can take quite a bit of hardware resources too (on the embedded scale, at least). Meanwhile, Opus is a great open-source format that was specifically designed for speech applications, and has low bitrate options handy if you need them.

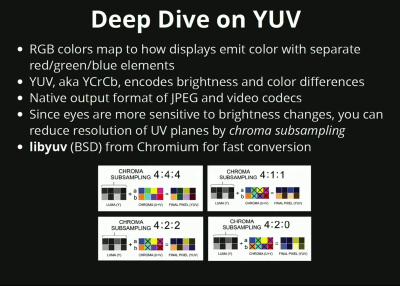

When it comes to video, Ben explains that it makes sense to first contemplate images. After all, what is video but a sequence of images? So many formats exist, from raw bitmaps to tiled formats and those relying on all kinds of compression. There’s also color formats to consider, along with relevant compression techniques like run-length encoding and the use of indexed color palettes. You’re probably familiar with RGB, but Ben goes through a useful explanation of YUV too, and why it’s useful. In short, it’s a color format that prioritizes brightness over color information because that’s what’s most important to a human viewer’s perception.

As for video formats themselves, there are a great many to pick from. Motion JPEG is one of the simplest, which is mostly just a series of JPEGs played one after another. Then there are the MPEG-1 and MPEG-2 standards from the 1990s, which were once widespread but have dropped off a lot since. H.264 has become a leading modern video standard, albeit with some patent encumbrances that can make it hard or expensive to use in some cases. H.265 is even more costly again. Standards like VP8, VP9, and AV1 were created to side step some of these patent issues, but with mixed levels of success. If you’re building a commercial product, you’ll have to consider these things.

Ben explains that video decoding can be very hardware intensive, far more so than working with simple images. Much of the time, it comes down to reference frames. Many codecs periodically store an “I-frame,” which is a fully-detailed image. They then only store the parts of the image that change in following frames to save space, before eventually storing another full I-frame some time later. This means that you need lots of RAM to store multiple frames of video at once, since decoding a later frame requires the earlier one as a reference.

Interestingly, Ben states that MPEG-1 is one of his favorite codecs at the moment. He explains its history as a format for delivering video on CD, noting that while it never took off in the US, it was huge in Asia. It has the benefit of being patent free since 2008. It’s also easy to decode with in C with a simple header called pl_mpeg. It later evolved into MPEG-2 which remains an important broadcast standard to this day.

The talk also crucially covers synchronization. In many cases, if you’ve got video, you’ve got audio that goes along with it. Even a small offset between the two streams can be incredibly off-putting; all the worse if they’re drifting relative to each other over time. Sync is also important for things like closed captions, too.

Ultimately, if you’re pursuing an audio or video project and you’ve never done one before, this talk is great for you. Rather than teaching you any specific lesson, it’s a great primer to get you thinking about the benefits and drawbacks of various media formats, and how you might pick the best one for your application. Ben’s guide might just save you some serious development time in future—and some horrible patent lawsuits to boot!