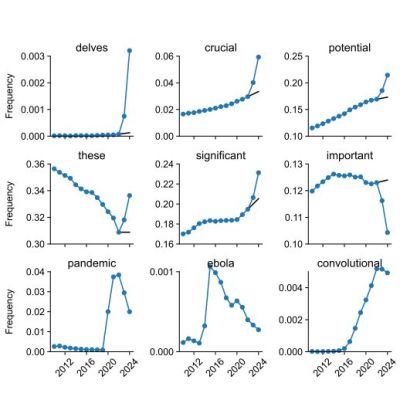

from 2021–22 to 2023–24. The first six words are affected by ChatGPT; the last three relate to major events that influenced scientific writing and are shown for comparison. (Credit: Kobak et al., 2024)

That students these days love to use ChatGPT for assistance with reports and other writing tasks is hardly a secret, but in academia its use is becoming ever more prevalent as well. This raises the question of whether ChatGPT-assisted academic writing can be distinguished somehow. According to [Dmitry Kobak] and colleagues this is the case, with a strong sign of ChatGPT use being the presence of a lot of flowery excess vocabulary in the text. As detailed in their prepublication paper, the frequency of certain style words changed remarkably in the vocabulary of the published works they examined.

For their study they looked at over 14 million biomedical abstracts from 2010 to 2024 obtained via PubMed. These abstracts were then analyzed for word usage and frequency, which revealed both natural increases in word frequency (e.g. from the SARS-CoV-2 pandemic and Ebola outbreak) and massive spikes in excess vocabulary that coincide with the public availability of ChatGPT and similar LLM-based tools.

In total 774 unique excess words were annotated. Here ‘excess’ means ‘outside of the norm’, following the pattern of ‘excess mortality’ where mortality during one period noticeably deviates from patterns established during previous periods. In this regard the bump in words like ‘respiratory’ is logical, but the surge in style words like ‘intricate’ and ‘notably’ would seem to be due to LLMs having a penchant for such flowery, overly dramatized language.
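To get a feel for what ‘excess’ usage means in practice, here is a minimal Python sketch. It is not the researchers' analysis code: the function names, the toy corpus, and the choice of a plain average over earlier years as the expected baseline are all assumptions made for illustration. It counts the fraction of abstracts containing each word, then subtracts the baseline from the observed value.

```python
import re
from collections import Counter


def doc_frequency(abstracts):
    """Fraction of abstracts in which each word occurs at least once."""
    counts = Counter()
    for text in abstracts:
        # Use a set so a word is counted once per abstract.
        counts.update(set(re.findall(r"[a-z]+", text.lower())))
    n = len(abstracts)
    return {word: c / n for word, c in counts.items()}


def excess_usage(baseline_years, target):
    """Observed frequency in `target` minus the mean baseline frequency.

    Assumption: the expected value is a simple average over the baseline
    years, which is a stand-in for whatever a real study would use.
    """
    baseline = [doc_frequency(year) for year in baseline_years]
    observed = doc_frequency(target)
    excess = {}
    for word, p in observed.items():
        expected = sum(freq.get(word, 0.0) for freq in baseline) / len(baseline)
        excess[word] = p - expected
    return excess


# Hypothetical toy corpus: two baseline "years" and one target "year".
year_a = ["the study measured respiratory function", "results were significant"]
year_b = ["the study measured cardiac function", "results were significant"]
year_c = [
    "this intricate study delves into cardiac function",
    "notably, results were significant",
]

excess = excess_usage([year_a, year_b], year_c)
for word in sorted(excess, key=excess.get, reverse=True)[:5]:
    print(f"{word}: {excess[word]:+.2f}")
```

On a real corpus the baseline would need to account for words that were already trending before the target period, which a flat average does not do.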

The researchers have made the analysis code available for those interested in giving it a try on another corpus. The main author also addressed the question of whether ChatGPT might be influencing people to write more like an LLM. At this point it remains an open question whether people will be more inclined to use ChatGPT-like vocabulary or actively seek to avoid sounding like an LLM.